Why an MIT researcher is calling for ‘algorithmic justice’ against AI biases

Ideas (53:59): A rallying cry for ‘algorithmic justice’ in the face of AI bias

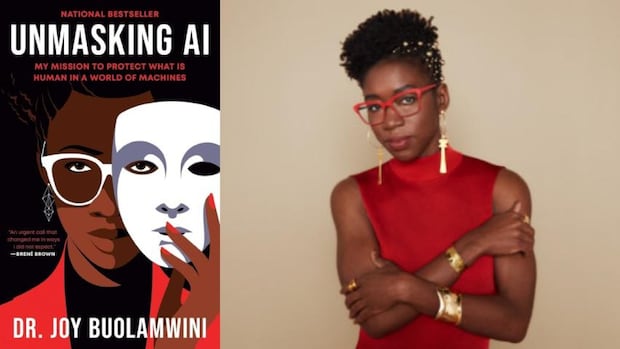

Joy Buolamwini is at the forefront of artificial intelligence research, documenting the many ways AI systems have caused harm through racial bias, gender bias and ableism. She is the founder of the Algorithmic Justice League, an organization working to make AI accountable.

“The growing frontier for civil rights will require algorithmic justice. AI must be for the many, not just the privileged few,” says Buolamwini.

Her research as a graduate student at MIT led her to call out Microsoft, IBM, Amazon and other tech giants, whose facial recognition systems failed to identify people of color. The worst results were for darker-skinned women. To make matters worse, this flawed facial recognition software was already in use by corporations and law enforcement agencies.

She first discovered the limits of face detection while working on a creative computing project.

“Face detection wasn’t really detecting my face until I put on a white mask. It was around Halloween time, and I had a white mask. Pull on the white mask, and the face of the white mask is detected. Take it off, and my dark-skinned face, my human face, my real face, is not detected. So I wondered: what’s going on here?”

In the years since, she has been a fierce advocate for fixing algorithmic bias, a problem she says will cost society dearly if it is not addressed.

Here is an excerpt from Joy Buolamwini’s Rubenstein Lecture, delivered at the Sanford School of Public Policy at Duke University in February 2025.

“Show of hands. How many have heard of the male gaze? The white gaze? The postcolonial gaze?

“To that lexicon, I add the coded gaze, and it is really a reflection of power. Who has the power to shape the priorities, the preferences and sometimes, perhaps not intentionally, the prejudices that are embedded in technology?

“I first encountered the coded gaze as a graduate student working on an art installation … I literally had to put on a white mask to have my dark skin detected. My friend, not so much. It was my first encounter with the coded gaze.

“I shared the story of coding in a white mask on the TEDx platform. A lot of people saw it. So I thought, you know what? People might want to check my claims, so let me check myself.”

https://www.youtube.com/watch?v=ug_x_7g63ry

“I took my TEDx profile image, and I started running it through online demos from different companies. I found that some companies did not detect my face at all. And those that did misgendered me as male. So I wondered whether it was just my face or other people’s too.

“So it is Black History Month [the lecture was recorded in February 2025]. I was excited to run some of the cast from Black Panther. In some cases there is no detection. In other cases there is misgendering … You have Angela Bassett. She is 59 in this picture. IBM is saying 18 to 24.

“What got me worried was moving beyond fictional characters and thinking about the ways in which AI, and especially AI-powered facial recognition, is showing up in the world.

“It is leading to things like false arrests, as well as non-consensual deepfakes and explicit imagery. And it affects everyone, especially when you have companies like Clearview AI scraping billions of photos courtesy of social media platforms. It is not that we gave them permission, but that is what they have done.

“So as we think about where we are in this stage of AI development, I often think of the excoded. The excoded represent anyone who has been condemned, convicted, exploited or otherwise harmed by AI systems.”

“I think about people like Porcha Woodruff, who was eight months pregnant when she was falsely arrested due to a facial recognition misidentification. She even reported having contractions while she was being held. What is crazy to me about her story is that, a few years earlier, the same police department had falsely arrested Robert Williams in front of his wife and family.

“So this is not a case where we did not know there were issues, right? In some cases it was willful negligence to keep using systems that have been shown, time and again, to have all kinds of harmful biases. These algorithms of discrimination persist. And that is one way you can be excoded.”

“Another way is that we have algorithms of surveillance.

“Some of you, as you are flying home for the holidays or to other places, are probably starting to see airport face scans creeping in.

“And then you have algorithms of exploitation. Celebrity will not save you. Lighter skin will not save you. With the rise of generative AI systems, we have seen the ability to create deepfakes and impersonate people, whether it is explicit images of Taylor Swift or Tom Hanks promoting a dental plan he has never heard of.”

Download the Ideas podcast to listen to the full episode.

*Excerpt edited for clarity and length. This episode was produced by Sean Foley.